Last week we were trying to set up a two-node 10g RAC system on Linux with Openfiler used for shared storage. We were using the article written by Jeffrey Hunter. This was not the first time I was doing it, but by mistake we chose a machine with 500 MB of memory for one of the RAC nodes and used a 1 GB machine for Openfiler. We carried on with the installation even though cluvfy and runInstaller gave us warnings about the low memory.

But once the installation completed, I found the database was shutting down frequently with “PMON Failed to acquire Latch”. I tried to debug it, but could not figure out anything from the systemstate dump that was generated.

Anyway, we decided to rebuild the system. We decided to clean up Machine 2 and meanwhile re-installed Openfiler. I did not have the OEL CDs that day, so I couldn't rebuild Machine 1. So I went ahead with cleaning Machine 2 for re-installing the software. I saw an opportunity to set up a single-node RAC and then add another node. I followed the steps below to clean up the RAC installation.

1) Stop the Nodeapps and Clusterware

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">srvctl stop nodeapps -n</span>

This will shut down the database, the ASM instance and the nodeapps (the -n option takes the name of the node). After this, you can stop the clusterware.

<span style="font-size: small; font-family: arial,helvetica,sans-serif;"># crsctl stop crs</span>

Please note that you can directly stop the clusterware (while cleaning up) as this will automatically stop the dependent resources.
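The stop order above can be sketched as a small shell function. This is only an illustration: the function name `stop_rac_stack` and the `DRY_RUN` switch are my own assumptions, not part of any Oracle tooling, and by default the function only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch of the stop order described above: nodeapps first, then clusterware.
# stop_rac_stack and DRY_RUN are hypothetical names used for illustration.

stop_rac_stack() {
    node="$1"
    if [ -z "$node" ]; then
        echo "usage: stop_rac_stack <nodename>" >&2
        return 1
    fi
    # With DRY_RUN=1 (the default here) the commands are printed, not executed.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        run="echo"
    else
        run=""
    fi
    # srvctl is normally run as the oracle user, crsctl as root.
    $run srvctl stop nodeapps -n "$node" || return 1
    $run crsctl stop crs
}
```

Calling `stop_rac_stack blrraclnx2` prints the two commands in order; setting `DRY_RUN=0` would actually execute them, which assumes both binaries are on the PATH of a user allowed to run them.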

2) Remove the installation files and other related files

I had installed CRS in /u01/app/crs and Database home was located in /u01/app/oracle. So I removed both the directories.

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">rm -rf /u01/app/crs

rm -rf /u01/app/oracle</span>

Note that if you have multiple Oracle database installations, ensure that you do not remove the oraInventory directory or any other ORACLE_HOME. In my case this was the only installation. Remove the following files related to clusterware:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">rm /etc/oracle/*

rm -f /etc/init.d/init.cssd

rm -f /etc/init.d/init.crs

rm -f /etc/init.d/init.crsd

rm -f /etc/init.d/init.evmd

rm -f /etc/rc2.d/K96init.crs

rm -f /etc/rc2.d/S96init.crs

rm -f /etc/rc3.d/K96init.crs

rm -f /etc/rc3.d/S96init.crs

rm -f /etc/rc5.d/K96init.crs

rm -f /etc/rc5.d/S96init.crs

rm -Rf /etc/oracle/scls_scr

rm -f /etc/inittab.crs

cp /etc/inittab.orig /etc/inittab

rm -rf /var/tmp/.oracle</span>
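Since these commands are destructive, it can help to rehearse them first. Below is a minimal sketch that wraps the same file list in a function taking a root prefix, so it can be tried against a scratch directory. The function name `cleanup_crs_files` and the prefix argument are assumptions of mine; the paths are the ones listed above.

```shell
#!/bin/sh
# Sketch: remove the clusterware files listed above under a given root prefix.
# cleanup_crs_files and its prefix argument are hypothetical, added so the
# removal can be rehearsed on a scratch tree; pass "/" for the real system.

cleanup_crs_files() {
    root="$1"
    if [ -z "$root" ]; then
        echo "usage: cleanup_crs_files <root-prefix>" >&2
        return 1
    fi
    # Clusterware init scripts
    for name in init.cssd init.crs init.crsd init.evmd; do
        rm -f "$root/etc/init.d/$name"
    done
    # Start/kill links in runlevels 2, 3 and 5
    for level in 2 3 5; do
        rm -f "$root/etc/rc$level.d/K96init.crs" "$root/etc/rc$level.d/S96init.crs"
    done
    # Contents of /etc/oracle (scls_scr directory first, then plain files)
    rm -rf "$root/etc/oracle/scls_scr"
    rm -f "$root"/etc/oracle/*
    # Restore the original inittab only if the backup exists
    rm -f "$root/etc/inittab.crs"
    if [ -f "$root/etc/inittab.orig" ]; then
        cp "$root/etc/inittab.orig" "$root/etc/inittab"
    fi
    # Socket directory used by the clusterware daemons
    rm -rf "$root/var/tmp/.oracle"
}
```

Running it against a copy of the tree (for example a directory created with `mktemp -d`) shows what would disappear before you point it at `/` as root.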

Also remove the OCR and voting disk files. In my case they were stored on the OCFS2 filesystem /u02/oradata/orcl. If they are on raw devices, you can wipe them using the dd command. Remove the ocr.loc file present in /etc/oracle.

You can also refer to Note:239998.1 – 10g RAC: How to Clean Up After a Failed CRS Install

In our case we were re-installing after a successful installation, so we also had to clean the ASM disks. They can likewise be cleaned by overwriting the disk header with the dd command.

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">dd if=/dev/zero of=/dev/sdb bs=1024 count=100</span>
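Because a wrong `of=` target destroys data, the same dd invocation can be rehearsed on an ordinary file first. The sketch below is an assumption-laden illustration: `wipe_header` and `header_is_zero` are hypothetical helper names, and `conv=notrunc` is added only so that a regular file is not truncated (it is not needed for a block device).

```shell
#!/bin/sh
# Rehearse the header wipe on a regular file instead of a real disk.
# wipe_header and header_is_zero are hypothetical helper names.

# Overwrite the first 100 KiB of the target with zeros.
# conv=notrunc keeps dd from truncating a regular file to 100 KiB;
# it makes no difference for a block device such as /dev/sdb.
wipe_header() {
    dd if=/dev/zero of="$1" bs=1024 count=100 conv=notrunc 2>/dev/null
}

# Succeed only if the first 100 KiB of the target contain no non-zero byte.
header_is_zero() {
    nonzero=$(head -c 102400 "$1" | LC_ALL=C tr -d '\000' | wc -c | tr -d ' ')
    [ "$nonzero" -eq 0 ]
}
```

For example, create a 200 KiB scratch file with `dd if=/dev/urandom of=/tmp/fake_disk.img bs=1024 count=200`, then run `wipe_header /tmp/fake_disk.img && header_is_zero /tmp/fake_disk.img`; the file path here is just a placeholder.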

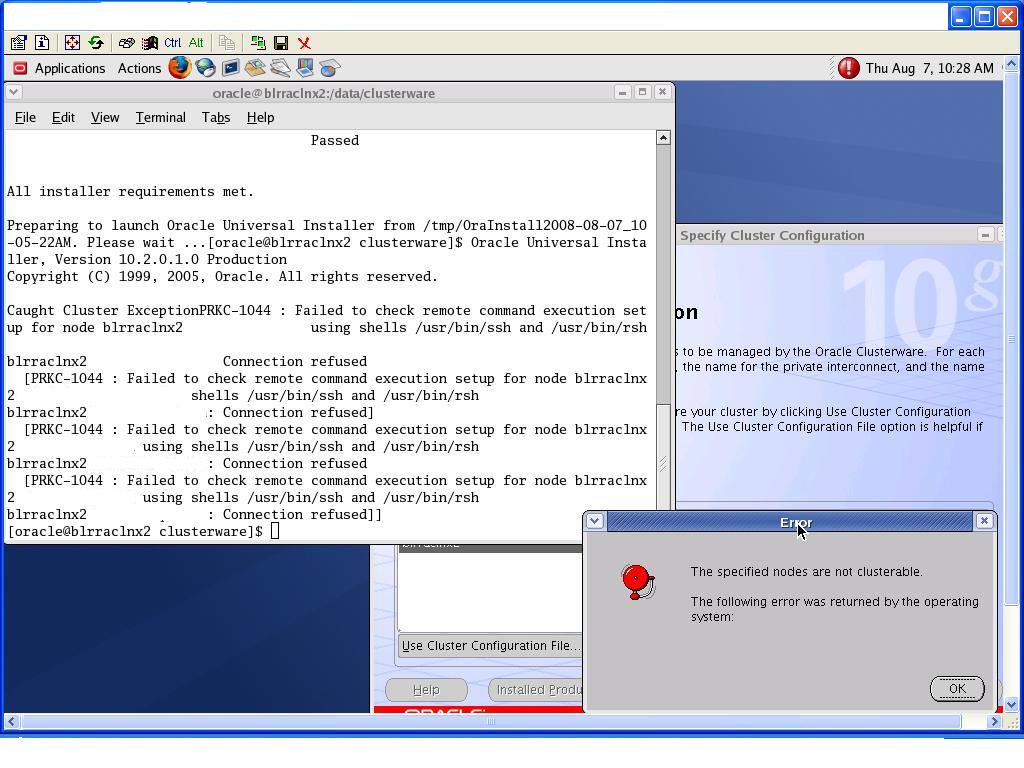

As we were removing the other node and had to reconfigure SSH, I removed the /home/oracle/.ssh directory. I didn’t reconfigure SSH, thinking it would not be required for a single-node install. I restarted the Clusterware install and encountered the following error:

“The Specified nodes are not clusterable”

In another window, one more error was reported, which actually made it clear where the problem was

“Failed to check remote command execution setup for node <nodename> shells /usr/bin/ssh and /usr/bin/rsh”

Screenshot for the error can be seen below

The above error clearly states that the problem was the unavailability of ssh or rsh. After this I set up ssh for the single node and tested it to avoid any further errors.

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">$ ssh blrraclnx2 date

Sun Aug 10 14:32:29 EDT 2008</span>
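The same check can be scripted so that it never hangs on a password prompt. This is a rough sketch, assuming OpenSSH: `BatchMode=yes` makes ssh fail instead of prompting, and `ConnectTimeout` bounds the wait. The function name `check_ssh_equivalence` and the `SSH_CMD` override are my own additions for illustration and testing.

```shell
#!/bin/sh
# Sketch: verify passwordless ssh to each node by running "date" remotely.
# check_ssh_equivalence and SSH_CMD are hypothetical; SSH_CMD only exists
# so that the ssh binary can be swapped out when trying the function.

check_ssh_equivalence() {
    rc=0
    for node in "$@"; do
        # BatchMode=yes: fail rather than ask for a password or passphrase.
        if ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$node" date >/dev/null 2>&1; then
            echo "$node: ok"
        else
            echo "$node: FAILED"
            rc=1
        fi
    done
    return $rc
}
```

Run as the oracle user, `check_ssh_equivalence blrraclnx2` should report `ok` once the keys are in place; for a single-node install the node has to be able to ssh to itself.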

Anyway, all these errors could have been avoided had I used the cluvfy utility as below

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">$ ./runcluvfy.sh stage -pre crsinst -n blrraclnx2 -verbose</span>

Learning:- Always use Cluvfy utility to ensure all pre-requisites are met before installing RAC components
