Oracle Goldengate supports Oracle to PostgreSQL migrations by supporting PostgreSQL as a target database, though reverse migration i.e PostgreSQL to Oracle is not supported. One of the key aspect of these database migrations is initial data load phase where full tables data have to copied to the target datastore. This can be a time consuming activity with time taken to load varying based on the table sizes. Oracle suggests to use multiple Goldengate processes to improve the database load performance or to use native database utilities to perform faster bulk-loads.

To use a database bulk-load utility, you use an initial-load Extract to extract source records from the source tables and write them to an extract file in external ASCII format. The file can be read by Oracle’s SQL*Loader, Microsoft’s BCP, DTS, or SQL Server Integration Services (SSIS) utility, or IBM’s Load Utility (LOADUTIL).

Goldengate for PostgreSQL doesn’t provide native file loader support like bcp for MS SQL and sqlloader for Oracle. As an alternative, we can use FORMATASCII option to write data into csv files (or any custom delimiter) and then load them using PostgreSQL copy command .This approach is not automated approach and you will have to ensure that all files are loaded into target database.

In this post, we will evaluate 2 approaches i.e using Multiple replicat Processes and using ASCII dump files with PostgreSQL copy command to load data and compare their performance. Below diagram shows both the approaches

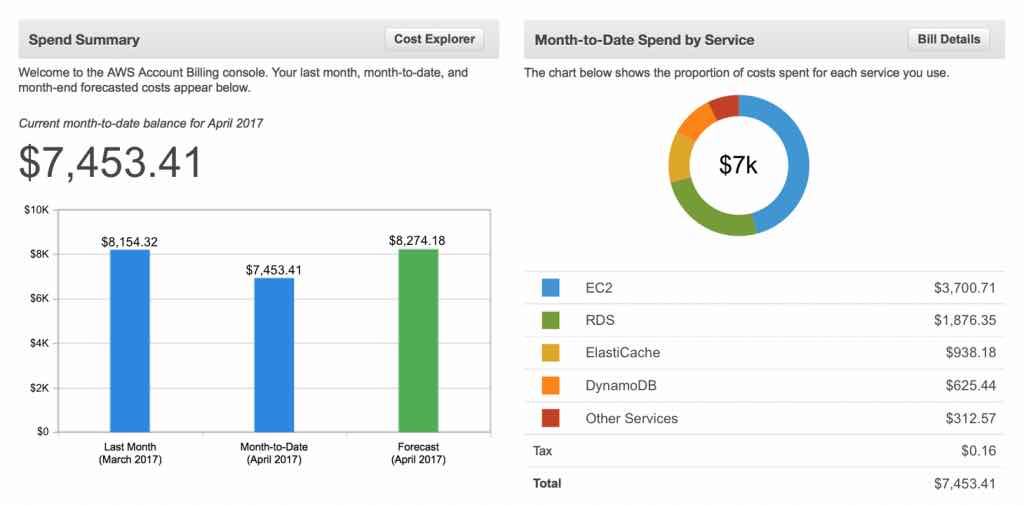

To compare the scenarios, I created a test table with 200M rows(12GB) and used a RDS PostgreSQL instance (db.r3.4xlarge with 10k PIOPS)

CREATE TABLE scott.Big_table (

id NUMBER,

small_number NUMBER(5),

big_number NUMBER,

short_string VARCHAR2(50),

created_date DATE,

CONSTRAINT big_table_pkey PRIMARY KEY (id)

) tablespace LRG_TBSP;

--create sequence for PK

create sequence scott.big_table_seq start with 1 increment by 1 cache 500 nomaxvalue;

-- Load data

INSERT /*+ APPEND */ INTO scott.Big_table

SELECT scott.big_table_seq.nextval AS id,

TRUNC(DBMS_RANDOM.value(1,5)) AS small_number,

TRUNC(DBMS_RANDOM.value(100,10000)) AS big_number,

DBMS_RANDOM.string('L',TRUNC(DBMS_RANDOM.value(10,50))) AS short_string

TRUNC(SYSDATE + DBMS_RANDOM.value(0,366)) AS created_date

FROM dual

CONNECT BY level <= 10000;

COMMIT;

INSERT /*+ APPEND */ INTO scott.Big_table

SELECT scott.big_table_seq.nextval AS id,

small_number,

big_number,

short_string,

long_string,

created_date

FROM scott.Big_table;

COMMIT;

--PostgreSQL Table

CREATE TABLE booker.Big_table (id bigint,

small_number int,

big_number bigint,

short_string VARCHAR(50),

created_date TIMESTAMP,

CONSTRAINT big_table_pk PRIMARY KEY (id) )

Approach 1 : Using Oracle Goldengate multiple replicat processes to load data

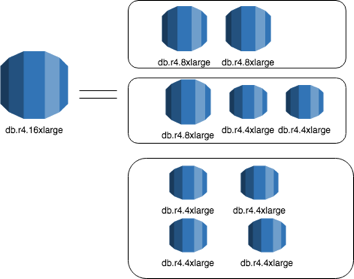

In this approach, I used multiple Oracle Goldengate Replicat processes (8) using @range filter to load data into PostgreSQL.

We were able to get 5k inserts/sec per thread and were able to load the table in ~88 mins with 8 replicat processes.

One key point to remember is that if you are working with EC2 and RDS databases, you should have EC2 machine hosting trail files and RDS instance in same AZ. During the testing, we noticed that insert rate dropped drastically (~800 insert per sec) when using cross AZ writes. Below is replicat parameter file used for performing data load.

SpecialRUN

END Runtime

SETENV (NLSLANG=AL32UTF8)

SETENV (NLS_LANG="AMERICAN_AMERICA.AL32UTF8")

SETENV ( PGCLIENTENCODING = "UTF8" )

SETENV (ODBCINI="/opt/app/oracle/product/ggate/12.2.0.1/odbc.ini" )

TARGETDB GG_Postgres, USERIDALIAS pguser

Extfile /fs-a01-a/databases/ggate/initload/i5

HANDLECOLLISIONS

DISCARDFILE /opt/app/oracle/product/ggate/12.2.0.1/direrr/rinit1.dsc, APPEND, megabytes 20

reportcount every 60 seconds, rate

BATCHSQL;

MAP scott.big_table, TARGET scott.big_table, FILTER (@RANGE (1,8));;

You will need to create additional replicat process files by making change to the range clause.e.g FILTER (@RANGE (2,8)), FILTER (@RANGE (3,8)), etc.

Approach 2: Data load using PostgreSQL copy command

In second approach, we used parameter file with FORMATASCII option(refer to below snippet) for creating a Goldengate Extract process which dumped the data with ‘|’ delimiter and then used PostgreSQL copy command to load data from these dump files.

Extract Parameter file

SOURCEISTABLE

SETENV (ORACLE_SID=ggpoc)

SETENV (NLSLANG=AL32UTF8)

SETENV (NLS_LANG="AMERICAN_AMERICA.AL32UTF8")

SETENV (ORACLE_HOME=/opt/app/oracle/product/11.2.0.4/A10db)

SETENV (TNS_ADMIN=/opt/app/oracle/local/network/)

USERIDALIAS gguser

RMTHOST xx.222.xx.78, MGRPORT 8200, TCPBUFSIZE 100000, TCPFLUSHBYTES 300000

RMTHOSTOPTIONS ENCRYPT AES256

FORMATASCII,NOHDRFIELDS,NOQUOTE,NONAMES, DELIMITER '|'

RMTFILE /fs-a01-a/databases/ggate/initload/i4 , megabytes 1000

reportcount every 60 seconds, rate

TABLE scott.BIG_TABLE;

With above parameter file, Goldengate Extract process would send data to remote system and store the data in dump files. These files are then loaded into PostgreSQL using \copy command.

psql> \copy scott.big_table from '/fs-a01-a/databases/ggate/initload/i4000000' with DELIMITER '|';

Data load took 21 mins, which is nearly 4x faster than initial approach. If you remove the Primary key index, then it drops the time taken to ~9 mins to load 200M POC table.

Update:

Oracle GoldenGate 19.1 comes with DataDirect 7.1 PostgreSQL Wire Protocol ODBC driver for PostgreSQL connectivity. You can now add a parameter “BatchMechanism=2” to speed up the inserts. After setting this parameter, odbc driver will start batching inserts into memory buffer and will insert them together instead of single row inserts. You can find details here

To add the parameter, update odbc.ini and add BatchMechanism under the database section

[apgtarget]

Driver=/oracle/product/ggate/19.1.0.0/lib/GGpsql25.so

Description=DataDirect 7.1 PostgreSQL Wire Protocol

BatchMechanism=2

Database=pgbench

HostName=xxx.xxxxxx.us-east-1.rds.amazonaws.com

PortNumber=5432

In my testing, I saw that insert rate increased from 5.3K rows per second to 35K i.e nearly 7x increase. I also noticed that WriteIOPS on this Aurora Instance increased from 20K to 80-100K IOPS

Recent Comments