Continuing my experiments with our two-node 10g RAC test system, I upgraded Oracle Clusterware and the Oracle RAC database from 10.2.0.1 to 10.2.0.4. This post documents the steps for the Oracle Clusterware upgrade (a rolling upgrade) and the RAC database upgrade. If you spot any mistakes, please let me know.

The first step is to download the 10.2.0.4 patch set from Metalink. In our case, we downloaded Patch 6810189 (10g Release 2 (10.2.0.4) Patch Set 3 for Linux x86). Follow the patch readme for detailed steps.

We will do a rolling upgrade of Oracle Clusterware, i.e. we bring down only one node at a time for patching while the other node stays available and keeps accepting database connections. Before you start, take the following backups so that you can restore the system if the upgrade fails:

a) Full OS backup (as some Oracle files are present in /etc and other system directories)

b) Full Database Backup (Cold or hot backup)

c) Backup of OCR and voting disk
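For item (c), one possible approach on 10.2 is a logical OCR export with `ocrconfig -export` plus a `dd` copy of each voting disk (the paths are listed by `crsctl query css votedisk`). The sketch below is only an illustration: the backup directory and the voting disk path are hypothetical placeholders, the commands need to run as root, and by default the script only prints what it would do.

```shell
#!/bin/sh
# Sketch: back up the OCR and the voting disks before patching (run as root).
# BACKUP_DIR and the voting disk path used below are hypothetical placeholders.

BACKUP_DIR=${BACKUP_DIR:-/backup/crs}   # assumption: any directory with free space
DRY_RUN=${DRY_RUN:-1}                   # 1 = only print commands, 0 = execute them

# Print the command, and execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# Logical export of the OCR to a dated file.
backup_ocr() {
    run ocrconfig -export "$BACKUP_DIR/ocr_$(date +%Y%m%d).exp"
}

# Copy each voting disk passed as an argument with dd.
# Find the real paths first with: crsctl query css votedisk
backup_votedisks() {
    n=0
    for disk in "$@"; do
        n=$((n + 1))
        run dd if="$disk" of="$BACKUP_DIR/votedisk_$n.bak"
    done
}

backup_ocr
backup_votedisks /dev/raw/raw2          # hypothetical voting disk path
```

Run it once as shown to review the printed commands, then again with `DRY_RUN=0` once the paths match your cluster.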

Let’s begin.

1) Shut down DB Console and iSQL*Plus

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">$ emctl stop dbconsole<br />$ isqlplusctl stop</span>

2) Shut down the associated service on the node

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop service -d orcl -s orcl_taf -i orcl1</span>

3) Shut down the database instance and the ASM instance (if present) on the node

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop instance -d orcl -i orcl1 </span>

To stop ASM, use the following command:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop asm -n blrraclnx1 </span>

4) Next, stop the nodeapps services on the node

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop nodeapps -n blrraclnx1</span>

Before installing the Oracle Clusterware patch, let’s confirm that the services on node 1 have been stopped:

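| HA Resource | Target | State |
|---|---|---|
| <strong>ora.blrraclnx1.ASM1.asm</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| <strong>ora.blrraclnx1.LISTENER1_BLRRACLNX1.lsnr</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| <strong>ora.blrraclnx1.gsd</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| <strong>ora.blrraclnx1.ons</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| <strong>ora.blrraclnx1.vip</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| ora.blrraclnx2.ASM2.asm | ONLINE | ONLINE on blrraclnx2 |
| ora.blrraclnx2.LISTENER1_BLRRACLNX2.lsnr | ONLINE | ONLINE on blrraclnx2 |
| ora.blrraclnx2.gsd | ONLINE | ONLINE on blrraclnx2 |
| ora.blrraclnx2.ons | ONLINE | ONLINE on blrraclnx2 |
| ora.blrraclnx2.vip | ONLINE | ONLINE on blrraclnx2 |
| ora.orcl.db | ONLINE | ONLINE on blrraclnx2 |
| <strong>ora.orcl.orcl1.inst</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| ora.orcl.orcl2.inst | ONLINE | ONLINE on blrraclnx2 |
| ora.orcl.orcl_taf.cs | ONLINE | ONLINE on blrraclnx2 |
| <strong>ora.orcl.orcl_taf.orcl1.srv</strong> | <strong>OFFLINE</strong> | <strong>OFFLINE</strong> |
| ora.orcl.orcl_taf.orcl2.srv | ONLINE | ONLINE on blrraclnx2 |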
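Eyeballing this output gets tedious, so here is a small sketch that lists anything still reported as ONLINE on a given node. It assumes the raw status output is whitespace-separated in the order resource, target, state, optionally followed by `on <host>`, as in the output above; the function name `online_on_node` is my own.

```shell
#!/bin/sh
# Sketch: list resources still ONLINE on a given node.
# Assumed input, one resource per line:
#   <resource> <target> <state> [on <host>]

online_on_node() {
    # $1 = node name; status lines are read from stdin
    awk -v node="$1" '$3 == "ONLINE" && $4 == "on" && $5 == node { print $1 }'
}

# A few lines copied from the status output above.
sample='ora.blrraclnx1.vip OFFLINE OFFLINE
ora.blrraclnx2.vip ONLINE ONLINE on blrraclnx2
ora.orcl.orcl1.inst OFFLINE OFFLINE
ora.orcl.orcl2.inst ONLINE ONLINE on blrraclnx2'

left=$(printf '%s\n' "$sample" | online_on_node blrraclnx1)
if [ -z "$left" ]; then
    echo "blrraclnx1: nothing reported online"
else
    echo "blrraclnx1: still online:"
    echo "$left"
fi
```

In practice you would pipe the real status output into `online_on_node` instead of the sample text.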

5) Set the DISPLAY variable and run runInstaller from the patch directory

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 Disk1]$ ./runInstaller </span>

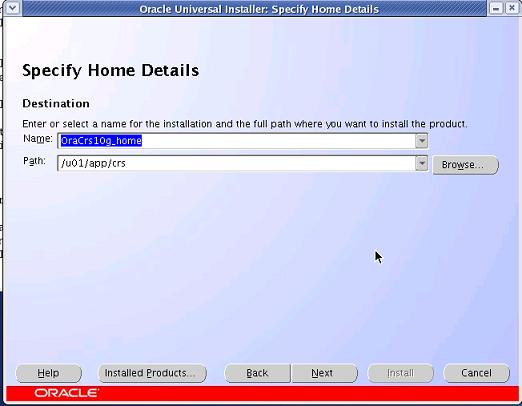

This will open OUI screen. Select Oracle Clusterware Home for Patching. Find below screenshot for same

This will automatically select all the nodes available in cluster and propogate patch binaries to the other node.

6) On the Summary screen, click Install. OUI will then prompt you to run the following two commands as root, which upgrade Oracle Clusterware on the node:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;"># $ORA_CRS_home/bin/crsctl stop crs<br /># $ORA_CRS_home/install/root102.sh</span>

Now repeat steps 1-4 and step 6 on node 2. Step 5 is not required, as the binaries have already been copied over to node 2.
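Since the same stop sequence is needed on each node, steps 1-4 can be wrapped in a small function. This is just a sketch built from the commands used in this post; the function names `run` and `stop_node` are my own, and by default it only prints the commands. Note that `emctl` and `isqlplusctl` act on the local node, so it should be run on the node being patched.

```shell
#!/bin/sh
# Sketch: steps 1-4 for one node, using the commands from this post.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.

DRY_RUN=${DRY_RUN:-1}

# Print the command, and execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# stop_node <db> <service> <instance> <node>
stop_node() {
    db=$1 service=$2 instance=$3 node=$4
    run emctl stop dbconsole
    run isqlplusctl stop
    run srvctl stop service -d "$db" -s "$service" -i "$instance"
    run srvctl stop instance -d "$db" -i "$instance"
    run srvctl stop asm -n "$node"
    run srvctl stop nodeapps -n "$node"
}

# On node 2, as the oracle user:
stop_node orcl orcl_taf orcl2 blrraclnx2
```

The sketch does not stop on the first failing command; for real use you may prefer to check each exit status before moving on.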

RAC database patching cannot be done in a rolling fashion and requires the database to be shut down.

1) Shut down DB Console and iSQL*Plus

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">$ emctl stop dbconsole<br />$ isqlplusctl stop</span>

2) Shut down the associated services for the database

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop service -d orcl </span>

3) Shut down the database (all instances) and the ASM instances (if present)

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop database -d orcl </span>

To stop ASM, use the following commands, one for each node:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop asm -n blrraclnx1<br />[oracle@blrraclnx1 ~]$ srvctl stop asm -n blrraclnx2</span>

4) Next, stop the listener on both nodes

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl stop listener -n blrraclnx1 -l LISTENER1_BLRRACLNX1<br />[oracle@blrraclnx1 ~]$ srvctl stop listener -n blrraclnx2 -l LISTENER1_BLRRACLNX2</span>

5) Set the DISPLAY variable and run runInstaller from the patch directory

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 Disk1]$ ./runInstaller </span>

This opens the OUI screen. Select the database home for patching.

6) On the Summary screen, click Install. When prompted, run the $ORACLE_HOME/root.sh script as the root user on both nodes. Once this completes, we need to perform the post-installation steps.

7) Start the listener and the ASM instance on both nodes

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ srvctl start listener -n blrraclnx1 -l LISTENER1_BLRRACLNX1<br />[oracle@blrraclnx1 ~]$ srvctl start listener -n blrraclnx2 -l LISTENER1_BLRRACLNX2<br />[oracle@blrraclnx1 ~]$ srvctl start asm -n blrraclnx1<br />[oracle@blrraclnx1 ~]$ srvctl start asm -n blrraclnx2</span>

8) For an Oracle RAC installation, set CLUSTER_DATABASE=FALSE before upgrading, then start the database in upgrade mode and run catupgrd.sql

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">[oracle@blrraclnx1 ~]$ sqlplus "/ as sysdba"<br />SQL> startup nomount<br />SQL> alter system set cluster_database=false scope=spfile;<br />System altered.<br />SQL> shutdown immediate;<br />SQL> startup upgrade<br />SQL> spool 10204patch.log<br />SQL> @?/rdbms/admin/catupgrd.sql<br />SQL> spool off</span>

Review the log file for any errors. catupgrd.sql took 42 minutes on my system. If the CLUSTER_DATABASE parameter is not set to FALSE, you will get the following error when starting the database in upgrade mode:

ORA-39701: database must be mounted EXCLUSIVE for UPGRADE or DOWNGRADE
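Reviewing the spool log can be partly automated. The sketch below simply prints every line containing an ORA- or SP2- message together with its line number; the function name `scan_upgrade_log` and the demo log content are my own. Each match still needs a human look, since a matching line is not necessarily a real problem.

```shell
#!/bin/sh
# Sketch: print ORA- and SP2- messages found in a spool log, with line numbers.
# Returns 0 when nothing was found, 1 when matches exist, 2 if the file is unreadable.

scan_upgrade_log() {
    # $1 = path to the spool file, e.g. 10204patch.log
    if [ ! -r "$1" ]; then
        echo "cannot read $1" >&2
        return 2
    fi
    if grep -n -E 'ORA-[0-9]+|SP2-[0-9]+' "$1"; then
        return 1
    fi
    return 0
}

# Demo on a small made-up log, so the snippet runs without a database.
demo_log=$(mktemp)
printf '%s\n' 'Package created.' \
    'ORA-39701: database must be mounted EXCLUSIVE for UPGRADE or DOWNGRADE' \
    > "$demo_log"
scan_upgrade_log "$demo_log" || echo "messages found, review before continuing"
rm -f "$demo_log"
```

Against the real log you would call `scan_upgrade_log 10204patch.log` from the directory where SQL*Plus wrote the spool file.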

Now restart the database and run utlrp.sql to recompile invalid objects.

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">SQL> SHUTDOWN IMMEDIATE<br />SQL> STARTUP<br />SQL> @?/rdbms/admin/utlrp.sql</span>

Confirm that the database has been upgraded successfully by querying DBA_REGISTRY:

select comp_name,version,status from dba_registry;
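If you want to check the result in a script rather than by eye, something like the sketch below could work. It assumes the query output has been reshaped into one `comp_name|version|status` line per component (for example by concatenating the three columns with `||'|'||` and switching headings and feedback off in SQL*Plus); that format, the function name `registry_problems`, and the sample rows are my own assumptions for illustration.

```shell
#!/bin/sh
# Sketch: flag DBA_REGISTRY rows that are not VALID or not at the target version.
# Assumed input: one "comp_name|version|status" line per component (my own format).

registry_problems() {
    # $1 = expected version prefix, e.g. 10.2.0.4; rows are read from stdin
    awk -F'|' -v want="$1" '
        NF == 3 && (index($2, want) != 1 || $3 != "VALID") { print }
    '
}

# Made-up sample rows, for illustration only.
rows='Oracle Database Catalog Views|10.2.0.4.0|VALID
Oracle Database Packages and Types|10.2.0.4.0|VALID
Oracle XML Database|10.2.0.1.0|INVALID'

# Prints only the rows that need attention; no output means all rows look fine.
printf '%s\n' "$rows" | registry_problems 10.2.0.4
```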

Now set the CLUSTER_DATABASE parameter back to TRUE, shut down the instance, and start the database and its services through srvctl:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">SQL> alter system set cluster_database=true scope=spfile;<br />SQL> shutdown immediate;<br />[oracle@blrraclnx1 ~]$ srvctl start database -d orcl<br />[oracle@blrraclnx1 ~]$ srvctl start service -d orcl</span>

To upgrade DB Console, run the following command:

<span style="font-size: small; font-family: arial,helvetica,sans-serif;">emca -upgrade db -cluster </span>

This completes the upgrade process.
