The 11.2.0.2 Patchset was released a few days back. I decided to upgrade a test RAC database to 11.2.0.2 yesterday and was able to do it successfully. I am documenting the steps here for easy reference.

Environment Details

2 node RAC on Red Hat Enterprise Linux AS release 4 (Nahant Update 8), 64 bit

Software and Patches

1) Download Patch 9655006 – 11.2.0.1.2 for Grid Infrastructure (GI) Patch Set Update from MOS

2) Latest Opatch for 11.2 – 6880880 Universal Installer: Patch OPatch 11.2

You can refer to How To Download And Install OPatch [ID 274526.1]

3) 11.2.0.2 Patchset files. You can find a method to download them directly to the server here

p10098816_112020_Linux-x86-64_1of7.zip – 11.2.0.2 Database Installation Files

p10098816_112020_Linux-x86-64_2of7.zip – 11.2.0.2 Database Installation Files

p10098816_112020_Linux-x86-64_3of7.zip – 11.2.0.2 Grid Installation Files

Install Latest Opatch
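The usual way to install a newer OPatch is to unzip the 6880880 download into each Oracle home, replacing the existing OPatch directory after keeping a backup of it. Below is a minimal sketch, assuming the zip has already been downloaded to the server. The function name install_opatch and the backup directory naming are my own choices for illustration and do not come from the MOS note.

```shell
# Replace the OPatch directory in an Oracle home with the contents of a
# downloaded OPatch zip, keeping a timestamped backup of the old directory.
# Arguments: path to the OPatch zip, path to the Oracle home.
install_opatch() {
    zip=$1
    home=$2
    [ -f "$zip" ] || { echo "zip not found: $zip" >&2; return 1; }
    # Keep the old OPatch directory so it can be restored if needed
    if [ -d "$home/OPatch" ]; then
        mv "$home/OPatch" "$home/OPatch.bak.$(date +%Y%m%d%H%M%S)" || return 1
    fi
    # The zip contains a top-level OPatch directory
    unzip -q -o "$zip" -d "$home"
}
```

Run it once per home (Grid home and database home) as the software owner, then check the result with $ORACLE_HOME/OPatch/opatch version. The zip location you pass in is whatever path you downloaded the file to.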

Apply Grid Infrastructure (GI) Patch Set Update – 9655006

[oracle@oradbdev01]~/software/11.2.0.2/psu% opatch prereq CheckConflictAgainstOHWithDetail -phBaseDir ./9655006

Invoking OPatch 11.2.0.1.3

Oracle Interim Patch Installer version 11.2.0.1.3

Copyright (c) 2010, Oracle Corporation. All rights reserved.

PREREQ session

Oracle Home : /oracle/product/app/11.2.0/dbhome_1

Central Inventory : /oracle/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.3

OUI version : 11.2.0.1.0

OUI location : /oracle/product/app/11.2.0/dbhome_1/oui

Log file location : /oracle/product/app/11.2.0/dbhome_1/cfgtoollogs/opatch/opatch2010-10-02_06-03-34AM.log

Patch history file: /oracle/product/app/11.2.0/dbhome_1/cfgtoollogs/opatch/opatch_history.txt

Invoking prereq "checkconflictagainstohwithdetail"

Prereq "checkConflictAgainstOHWithDetail" passed.

OPatch succeeded.

We need to use opatch auto to patch both the Grid Infrastructure and RAC database homes. It can also patch each home separately, but I am patching both together. This is a great improvement over the 10g CRS patch bundles, which had a lot of steps to be run as root or as the oracle software owner. (I once messed up myself by running a command as root instead of oracle 🙁) OPatch patches the database home first and then the Grid Infrastructure home. Stop the database instance running out of the Oracle home before you start. (OPatch asks you to shut down the database, so if you forget, don’t worry, it will warn you.)

Note: In case you are on RHEL5/OEL5 and using ACFS for database volumes, it is recommended to use opatch auto <loc> -oh <grid home> to first patch the Grid home instead of patching them together

Unzip patch 9655006 to a directory, say /oracle/software/gips2. It will create two directories, for patches 9654983 and 9655006. Connect as the root user and set the Oracle home and OPatch directory. Stop the database instance running on the node

srvctl stop instance -d db11g -i db11g1

Next, run the opatch auto command as root

#opatch auto /oracle/software/gips2

Oracle Interim Patch Installer version 11.2.0.1.3

Copyright (c) 2010, Oracle Corporation. All rights reserved.

UTIL session

Oracle Home : /oracle/product/grid

Central Inventory : /oracle/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.3

OUI version : 11.2.0.1.0

OUI location : /oracle/product/grid/oui

Log file location : /oracle/product/grid/cfgtoollogs/opatch/opatch2010-10-02_06-35-03AM.log

Patch history file: /oracle/product/grid/cfgtoollogs/opatch/opatch_history.txt

Invoking utility "napply"

Checking conflict among patches...

Checking if Oracle Home has components required by patches...

Checking conflicts against Oracle Home...

OPatch continues with these patches: 9654983

Do you want to proceed? [y|n]

y

User Responded with: Y

Running prerequisite checks...

Provide your email address to be informed of security issues, install and initiate Oracle Configuration Manager. Easier for you if you use your My Oracle Support Email address/User Name.

Visit http://www.oracle.com/support/policies.html for details.

Email address/User Name:

You have not provided an email address for notification of security issues.

Do you wish to remain uninformed of security issues ([Y]es, [N]o) [N]: Y

You selected -local option, hence OPatch will patch the local system only.

Please shutdown Oracle instances running out of this ORACLE_HOME on the local system.

(Oracle Home = '/oracle/product/grid')

Is the local system ready for patching? [y|n]

y

User Responded with: Y

Backing up files affected by the patch 'NApply' for restore. This might take a while...

I have not copied the whole output. It asks you to specify MOS credentials for setting up Oracle Configuration Manager. You can skip this, as shown above. You will find the following errors at the end

OPatch succeeded.

ADVM/ACFS is not supported on Redhat 4

Failure at scls_process_spawn with code 1

Internal Error Information:

Category: -1

Operation: fail

Location: canexec2

Other: no exe permission, file [/oracle/product/grid/bin/ohasd]

System Dependent Information: 0

CRS-4000: Command Start failed, or completed with errors.

Timed out waiting for the CRS stack to start.

This is a known issue, discussed in 11.2.0.X Grid Infrastructure PSU Known Issues [ID 1082394.1]

To solve this issue, connect as the root user and execute the following command from your Grid Infrastructure home

cd $GRID_HOME

# ./crs/install/rootcrs.pl -patch

2010-10-02 06:59:54: Parsing the host name

2010-10-02 06:59:54: Checking for super user privileges

2010-10-02 06:59:54: User has super user privileges

Using configuration parameter file: crs/install/crsconfig_params

ADVM/ACFS is not supported on Redhat 4

CRS-4123: Oracle High Availability Services has been started.

Start the Oracle database instance on the node that has been patched

srvctl start instance -d db11g -i db11g1
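The per-node sequence above can be summarized with a small helper that only prints the commands in order, so they can be reviewed before being run by hand. This is a sketch: print_psu_steps is a hypothetical name, and the instance name you pass for node 2 (for example db11g2) is an assumption based on the db11g1 naming used on node 1.

```shell
# Print the per-node PSU steps in order, without executing anything.
# Arguments: database name, instance name, patch staging directory, Grid home.
print_psu_steps() {
    db=$1
    inst=$2
    patch_dir=$3
    grid_home=$4
    echo "srvctl stop instance -d $db -i $inst"
    echo "opatch auto $patch_dir"
    # Only needed if opatch auto ends with the ohasd permission error
    echo "$grid_home/crs/install/rootcrs.pl -patch"
    echo "srvctl start instance -d $db -i $inst"
}
```

For node 1 this would be called as print_psu_steps db11g db11g1 /oracle/software/gips2 /oracle/product/grid. The srvctl lines are run as the oracle user and the other two as root.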

Repeat the above steps for node 2. The opatch auto activity took me 40 minutes on each node. Once it is done, execute the following from one node, for every database, to complete the patch installation

cd $ORACLE_HOME/rdbms/admin

sqlplus /nolog

SQL> CONNECT / AS SYSDBA

SQL> @catbundle.sql psu apply

SQL> QUIT

This completes the Grid Infrastructure PSU2 patching.

Upgrading Grid Infrastructure home to 11.2.0.2

Ensure the following environment variables are not set: ORA_CRS_HOME, ORACLE_HOME, ORA_NLS10, TNS_ADMIN

# echo $ORA_CRS_HOME; echo $ORACLE_HOME; echo $ORA_NLS10; echo $TNS_ADMIN

Starting with 11.2.0.2, the Grid Infrastructure (Clusterware and ASM home) upgrade is an out-of-place upgrade, i.e. we install into a new ORACLE_HOME. Unlike the database home, we cannot perform an in-place upgrade of Oracle Clusterware and Oracle ASM to existing homes.

Unset the following variables too

$ unset ORACLE_BASE

$ unset ORACLE_HOME

$ unset ORACLE_SID
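A plain echo cannot tell an unset variable from one that is set to an empty string, so a slightly stricter check can help before starting the installer. The sketch below lists which of the given variable names are still set in the current shell. The function name list_set_vars is hypothetical.

```shell
# Print the names of the given variables that are currently set
# (even if set to an empty string). Prints nothing when all are unset.
list_set_vars() {
    for name in "$@"; do
        # ${VAR+x} expands to "x" only when VAR is set
        if eval "[ -n \"\${$name+x}\" ]"; then
            echo "$name"
        fi
    done
}
```

Calling list_set_vars ORA_CRS_HOME ORACLE_HOME ORA_NLS10 TNS_ADMIN ORACLE_BASE ORACLE_SID should print nothing in the session that will launch runInstaller. Any name it prints still needs an unset.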

Relax permissions for GRID_HOME

#chmod -R 755 /oracle/product/grid

#chown -R oracle /oracle/product/grid

#chown oracle /oracle/product

Start the runInstaller from the 11.2.0.2 grid software directory.

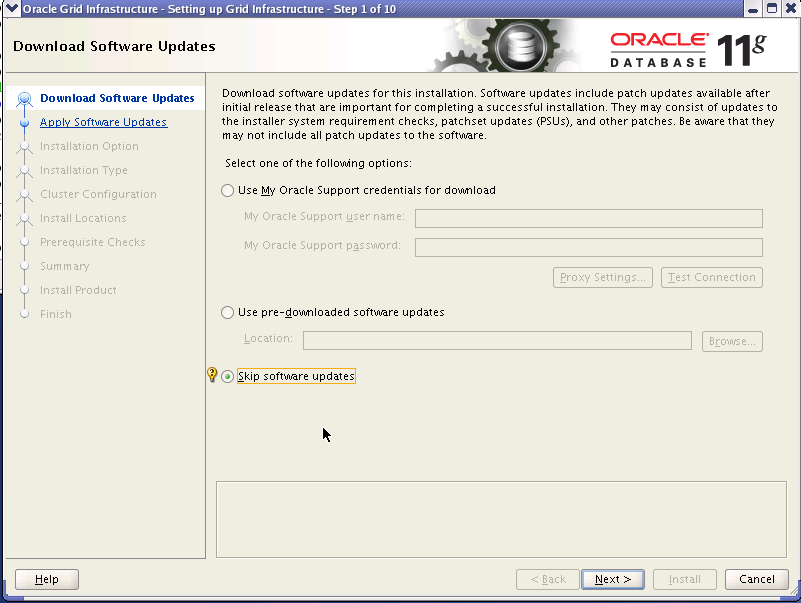

On the first screen, it asks for MOS details. I chose to skip software updates

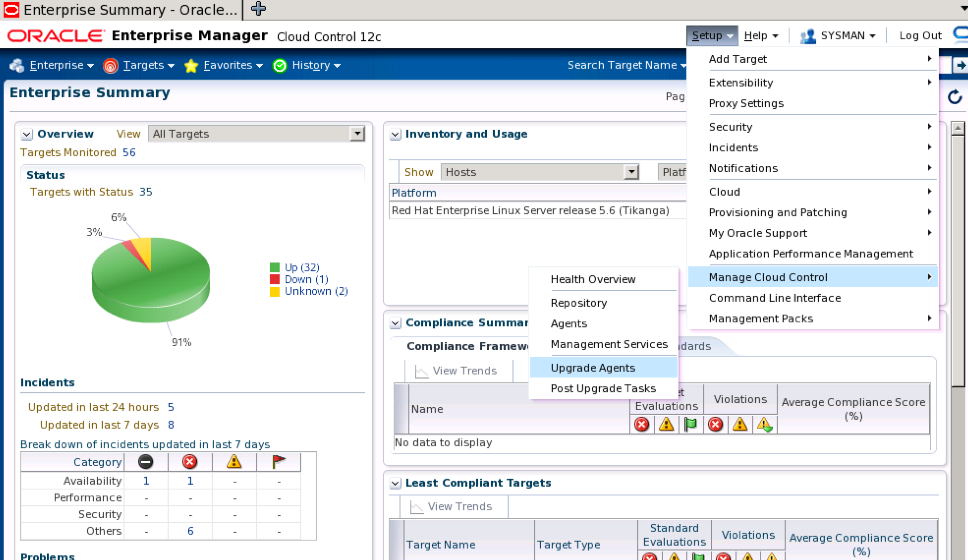

Since we are upgrading existing installation, choose “Upgrade Oracle Grid Infrastructure or Oracle Automatic Storage Management”. This will install software and also configure ASM

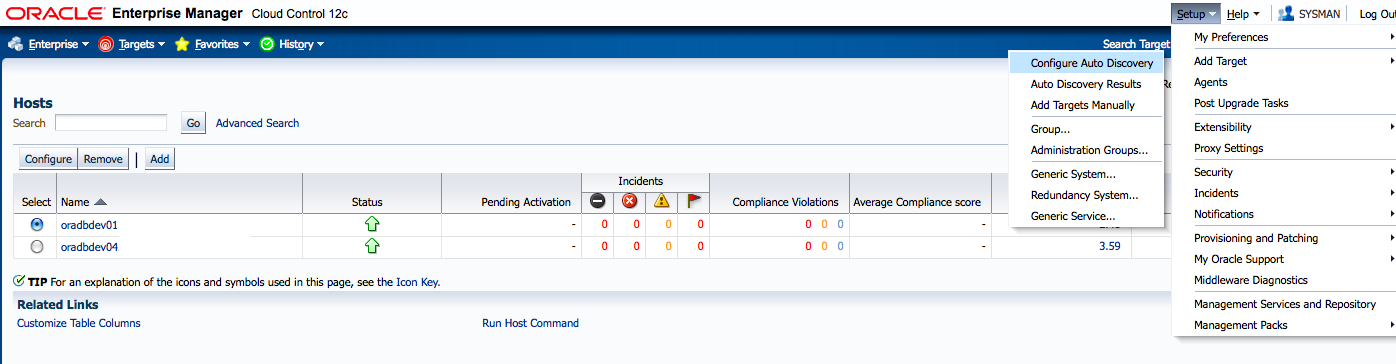

The next screen displays the nodes that OUI will patch

Select the OSDBA, OSASM and OSOPER groups. I have chosen dba for all three

Since I have chosen the same group for all three, it gives a warning. Select Yes to continue

Specify the grid software installation directory. I have used /oracle/product/oragrid/11.2.0.2 (It is better to include the release in the path, as future patchsets will be out-of-place upgrades too)

OUI reports some issues with swap size, shmmax and NTP. You can fix them or choose to ignore them. OUI can create a fixup script for you.

The installation starts copying files. After the files have been copied to both nodes, it asks you to run the rootupgrade.sh script on all nodes

Stop the database instance running on the node at this point and then run the script as root. Note that ASM and Clusterware should not be stopped: rootupgrade.sh requires them to be up and takes care of shutting them down and restarting them from the new home. In my case, rootupgrade.sh succeeded on node 1 but hung on node 2. I cancelled it and re-ran it. Here is the output from the second run on node 2

[root@oradbdev02 logs]# /oracle/product/oragrid/11.2.0.2/rootupgrade.sh

Running Oracle 11g root script...

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /oracle/product/oragrid/11.2.0.2

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /oracle/product/oragrid/11.2.0.2/crs/install/crsconfig_params

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 11g Release 2.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-1115: Oracle Clusterware has already been upgraded.

ASM upgrade has finished on last node.

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

On clicking Next in OUI, it reported that cluvfy had failed and that some components were not installed properly. To verify, I ran cluvfy manually. Here are the parts of the output where it failed

./cluvfy stage -post crsinst -n oradbdev01,oradbdev02 -verbose

-------------------------------truncated output -------------------

ASM Running check passed. ASM is running on all specified nodes

Checking OCR config file "/etc/oracle/ocr.loc"...

ERROR: PRVF-4175 : OCR config file "/etc/oracle/ocr.loc" check failed on the following nodes:

oradbdev02:Group of file "/etc/oracle/ocr.loc" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

oradbdev01:Group of file "/etc/oracle/ocr.loc" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

Disk group for ocr location "+DG_DATA01" available on all the nodes

OCR integrity check failed

-------------------------------truncated output -------------------

Checking OLR config file...

ERROR: PRVF-4184 : OLR config file check failed on the following nodes:

oradbdev02:Group of file "/etc/oracle/olr.loc" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

oradbdev01:Group of file "/etc/oracle/olr.loc" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

Checking OLR file attributes...

ERROR: PRVF-4187 : OLR file check failed on the following nodes:

oradbdev02:Group of file "/oracle/product/oragrid/11.2.0.2/cdata/oradbdev02.olr" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

oradbdev01:Group of file "/oracle/product/oragrid/11.2.0.2/cdata/oradbdev01.olr" did not match the expected value. [Expected = "oinstall" ; Found = "dba"]

OLR integrity check failed

-------------------------------truncated output -------------------

Checking NTP daemon command line for slewing option "-x"

Check: NTP daemon command line

Node Name Slewing Option Set?

------------------------------------ ------------------------

oradbdev02 no

oradbdev01 no

Result: NTP daemon slewing option check failed on some nodes

PRVF-5436 : The NTP daemon running on one or more nodes lacks the slewing option "-x"

Result: Clock synchronization check using Network Time Protocol(NTP) failed

PRVF-9652 : Cluster Time Synchronization Services check failed

cluvfy reports that it expected the oinstall group but found the dba group. I had not specified a separate install group during installation, so this can be ignored. For NTP, you can correct it by setting the following in /etc/sysconfig/ntpd and restarting the ntpd daemon

grep OPTIONS /etc/sysconfig/ntpd

OPTIONS="-u ntp:ntp -p /var/run/ntpd.pid -x"

Refer to How to Configure NTP to Resolve CLUVFY Error PRVF-5436 PRVF-9652 [ID 1056693.1]
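To confirm on each node that the slewing option is actually present before re-running cluvfy, a small check along these lines can be used. It is a sketch: the function name ntp_has_slew_option is hypothetical, and it only inspects the OPTIONS line of the sysconfig file, not the command line of the running daemon.

```shell
# Return 0 if the OPTIONS line of the ntpd sysconfig file contains -x,
# non-zero otherwise. Defaults to /etc/sysconfig/ntpd.
ntp_has_slew_option() {
    conf=${1:-/etc/sysconfig/ntpd}
    # Take only uncommented OPTIONS= lines, then look for -x as a whole word
    grep -E '^[[:space:]]*OPTIONS=' "$conf" |
        grep -Eq -- '(^|[[:space:]"])-x([[:space:]"]|$)'
}
```

For example, ntp_has_slew_option || echo "add -x to OPTIONS and restart ntpd". After editing the file, restart the daemon as root with service ntpd restart.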

At this moment Grid Infrastructure has been successfully upgraded to 11.2.0.2. Next we will upgrade the RAC database home. You can refer to Steps to Upgrade 11.2.0.1 RAC to 11.2.0.2

You said that to upgrade existing 11.2.0.1 Oracle Grid Infrastructure installations to Oracle Grid Infrastructure 11.2.0.2, you must first do at least one of the following:

– Patch the release 11.2.0.1 Oracle Grid Infrastructure home with the fix for bug 9413827.

– Install Oracle Grid Infrastructure Patch Set 1 (GIPS1) or Oracle Grid Infrastructure Patch Set 2 (GIPS2).

Since 11.2 patches are full installs, why do you need to patch anything?

Thanks,

Evan

Evan,

You need to apply these patches only when upgrading, not for new installs. If you don’t install the above patches, the upgrade will fail with errors. One of the issues is listed in Note ID 10036834.8

Cheers

Amit

After applying gips2 to the GI and RDBMS homes, did you check the version in the database or in opatch lsinventory? Per my understanding it should change the last digit.

Thanks

Dharmesh,

Version did not change

SQL> select version from V$instance;

VERSION

-----------------

11.2.0.1.0

I also checked opatch lsinventory and still version is 11.2.0.1.

-Amit

Dharmesh,

The database version is not changed, but the application of the PSU updates the registry$history view where you can see the last digit updated with the recent PSU applied.

Thanks,

Saurabh Sood

askdba.org/weblog
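To see the PSU entry mentioned in the comment above, the history table can be queried after catbundle.sql has run. The sketch below only emits the SQL so that it can be piped into SQL*Plus; the function name psu_history_sql and the column formatting are my own choices.

```shell
# Emit a query that lists the bundle/PSU history recorded by catbundle.sql.
# The heredoc is quoted so the shell leaves registry$history untouched.
psu_history_sql() {
    cat <<'EOF'
set linesize 200
col action_time format a30
col comments format a40
select action_time, action, version, id, comments
  from sys.registry$history
 order by action_time;
EOF
}
```

Run it as the oracle user with ORACLE_HOME and ORACLE_SID set, for example psu_history_sql | sqlplus -s "/ as sysdba". After the PSU has been applied, a row with the PSU in the comments column should be listed.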

Nice document. I did a few test upgrades from 10.2.0.4 to 11.2.0.1, 11.2.0.1 to 11.2.0.2 and 10.2.0.4 to 11.2.0.2.

While upgrading from 10204, I observed that the voting disk permission needs to be changed from 644 to 640. With 11gR2, it seems that the voting disk, like OCR, should have 640, which was not the case with 10gR2.

Thanks Ritesh for the info. I used ASM for placing OCR/Voting disk, so wasn’t aware of the permission step. I think you should publish steps for 10g -> 11gr2

-Amit

Hi Ritesh, you said you did a 10.2.0.4 to 11.2.0.2 upgrade. Q1) Is it an out-of-place upgrade? Q2) If it is an out-of-place upgrade, which option should we choose in grid/runInstaller? Q3) And is an 11.2.0.1 to 11.2.0.2 upgrade also an out-of-place upgrade?

Please respond as soon as possible, as I am confused about which upgrade method to choose.

Please share the installation document you prepared for your upgrade and the steps you followed.

You can post it here or send it to [email protected].

It will be a great help to me.

Note: I don’t know much about RAC, but I still have to complete this upgrade somehow. Please help me.

That’s why I need the step-by-step procedure.

I am also a little confused about SCAN, as 10g did not have SCAN and 11g does.

Thank You , I appreciate it .

Bhasker,

The step-by-step process is documented on my blog. I hope that answers most of your queries.

http://www.ritzyblogs.com/OraTalk/PostID/119/Getting-started-with-11-2-0-2-The-Upgrade

Regards

Ritesh

This is a great walkthrough, but have you attempted downgrading from 11.2.0.2 back to 11.2.0.1.2?

My first upgrade attempt failed due to the Multicast not being available, and now I can’t seem to get back to 11.2.0.1.2. (Every downgrade seems to think I’m going back to a pre-11.2 version)

Hi Tyfanie,

I haven’t tried downgrading my database version. But I was looking at catdwngrd.sql and it seems to query registry$ to find previous version

20:44:04 SQL> select PRV_VERSION FROM registry$ WHERE cid='CATPROC';

PRV_VERSION

------------------------------

11.2.0.1.0

So you should be able to go back to 11.2.0.1.0 version

-Amit

Hi Guys,

Does anyone have steps for reverting 11202 GI back to 112012 after a failed upgrade, such as a rootupgrade.sh failure?

Was not able to find in MOS.

Thanks

Dharmesh,

You may want to try $GRID_HOME/crs/install/rootcrs.pl -downgrade -force and, if that does not work, rootcrs.pl -deconfig -force. There is a detailed document on Metalink that explains various cases of failures with rootupgrade.sh. I simulated a lot of rootupgrade failures during my test cases and found that the document works pretty well.

How to Proceed from Failed Upgrade to 11gR2 Grid Infrastructure (CRS) [ID 969254.1]

Regards

Ritesh

Thanks Ritesh. Sorry for the late reply. The note talks about pre-11gR2 downgrades, but I guess it is equally applicable for 11202 to 11201. Ritesh, can you please provide full details of the 11202 to 11201 GI downgrade? Appreciate it.

General regarding 11201 to 11202 :

1) If applicable, refer to 11.2.0.2 Grid Infrastructure Install or Upgrade may fail due to Multicasting Requirement [ID 1212703.1]

2) Even after applying gips2 to GI, when running rootupgrade.sh later I got this message in my env: "Failed to add (property/value):('OLD_OCR_ID/'-1') for checkpoint:ROOTCRS_OLDHOMEINFO.Error code is 256". rootupgrade.sh still completed successfully and the active version was 11202, so I ignored that message.

Thanks,

Dharmesh P Parekh.

Hi, thank you for that walkthrough. I have a question about the grid PSU install part.

When I run the opatch command, the following error occurs:

Prerequisite check “CheckActiveFilesAndExecutables” failed.

The details are:

Following executables are active :

/u01/app/11.2.0/grid/bin/oracle

Is this normal behavior? When I go ahead anyway, the installation finishes successfully.

Christian,

I checked my installation notes and I didn’t encounter any such messages. Most likely your database was running when you started applying this patch.

Cheers

Amit

Hi – If 11.2.0.2 requires an out-of-place installation, why do i need to apply 9655006 to the existing gi and dbms homes?

Thanks,

Bev

Bev,

As mentioned earlier in comment sections, this is to take care of existing bug. Refer MOS note 10036834.8 for details

Cheers

Amit

Hi Amit,

Thanks for your reply. I stopped the instance with srvctl on the node I was going to patch.

The only difference in the execution of the opatch command is that I need to add the -oh parameter. Otherwise the opatch command failed. Maybe this is the reason for the error messages. The usage of the -oh parameter is mentioned in the MOS note 1082394.1.

Did you set some environment variables for the root user before you start the opatch auto command?

I did not set any environment while running opatch auto. It is supposed to take care of it.

Thank you Amit. Still a little confused read 10036834.8 and it says if available install patch 9655006 to the 11.2.0.1 GI home before upgrading to 11.2.0.2 – it did not mention the dbms home.

Bev,

I think note should be corrected to include both homes. As you can see I have used opatch auto which patched both homes.

Amit,

In that case, I think you can put comment on the note for including “Both” homes.

Thanks.

Awesome job. I can’t thank you enough for putting this blog up as it made my upgrade process go super easy. Thanks again.

Thanks Omkar for nice comment

Cheers

Amit

Installed the GI and it got clear down to the root.sh scripts when it died letting me know that Bug 9413827 needed to be applied prior. I clicked OK and the install died. I applied the patch to GI and RDBMS, but now when I try to rerun the install I get “INS 40406 – The installer detects no existing Oracle GI software on the system”.

In haste the files in 11.2.0.2 were deleted. We edited oraInventory/ContentsXML/inventory.xml and removed any reference to 11.2.0.2 including the new grid home. We are still receiving the INS 40406 error. Now what? Can we just install the grid software in 11.2.0.2/grid? How do we upgrade from there?

Any advice is welcomed.

Is anyone running 10g databases with the 11202 upgrade? Since it’s out-of-place, all of my databases are looking for crs_stat in the $OGH. Even after I recreate them in CRS… I can’t find this dependency.

The error I’m getting is: PRKA-2019 : Error executing command “$Old_Grid_HOME/bin/crs_stat”. File is missing.

Anyone else see this?

@DHARMESH: Because I was still using Raw vote disks/ocr, I ended up having to go back to a backup of my entire server to get back to 11.2.0.1. I am going to try it again & see what happens.

I had similar issue. Check inventory.xml. CRS=true may still be pointing to old CRS home. Point it to new 11g CRS home and you should be good.
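To check which home the central inventory currently flags as the CRS home, as suggested in the comment above, something like the following can be used. It is a sketch: the function name show_crs_home is hypothetical, and the default path assumes the /oracle/oraInventory location used in this post.

```shell
# Print the HOME entries that carry CRS="true" in the central inventory file.
# Argument: path to inventory.xml (defaults to the location used in this post).
show_crs_home() {
    inv=${1:-/oracle/oraInventory/ContentsXML/inventory.xml}
    grep 'CRS="true"' "$inv"
}
```

If the line printed still shows the old Clusterware home in its LOC attribute, the inventory needs to be updated so that the flag sits on the new 11.2.0.2 Grid home.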

Hi Ritesh,

I started a 10.2.0.4 to 11.2.0.2 upgrade. Choosing the INSTALL GRID INFRASTRUCTURE SOFTWARE option, I installed on node 1 (Linux1) and ran the root.sh script from node 1 as instructed by the installer. After running root.sh as root, the output was like this

To configure Grid Infrastructure for a Cluster execute the following command:

/grid/product/11.2.0.2/crs/config/config.sh

This command launches the Grid Infrastructure Configuration Wizard. The wizard also supports silent operation, and the parameters can be passed through the response file that is available in the installation media.

I ran config.sh and chose the upgrade option.

On the next step it showed this error

[INS-42016] The current Grid home is not registered in the central inventory on the following nodes: [linux2]

I did not find much help on the web or Metalink.

Please help me with this.

Thanks,

Bhasker.

You saved me a load of work, Amit. This was right on the money. Thanks so much. It is amazing to me that Oracle would release a product with documentation so confusing. Not to mention the errors during upgrade that have no explanation. I was running ragged until I saw your post.

Hi Amit,

We have 11.2.0.1 GI and a 10.2.0.5 DB. I just want to upgrade the cluster and ASM to 11.2.0.2 and keep the DB at its existing version, 10.2.0.5. Is there a patch that upgrades JUST the cluster and ASM?

In this case you need to install grid and ASM. Copy the ASM parameter file to the new grid_home. You can start the db from the 10.2.0.5 home and add the db to OCR using 10g srvctl.

-Amit

Hi Amit,

Facing strange issue.

Applied patch 9413827 in 11.2.0.1 grid home and installed 11.2.0.2 in the new home but rootupgrade.sh fails.

====

[oracle@node1 ~]$ /u01/app/11.2.0/grid/OPatch/opatch lsinv -local

Invoking OPatch 11.2.0.1.6

Oracle Interim Patch Installer version 11.2.0.1.6

Copyright (c) 2011, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/11.2.0/grid

Central Inventory : /u01/app/oraInventory

from : /etc/oraInst.loc

OPatch version : 11.2.0.1.6

OUI version : 11.2.0.1.0

Log file location : /u01/app/11.2.0/grid/cfgtoollogs/opatch/opatch2011-08-26_17-58-29PM.log

Lsinventory Output file location : /u01/app/11.2.0/grid/cfgtoollogs/opatch/lsinv/lsinventory2011-08-26_17-58-29PM.txt

——————————————————————————–

Installed Top-level Products (1):

Oracle Grid Infrastructure 11.2.0.1.0

There are 1 products installed in this Oracle Home.

Interim patches (1) :

Patch 9413827 : applied on Fri Aug 26 17:27:28 BST 2011

Unique Patch ID: 12900951

Created on 17 Aug 2010, 03:25:41 hrs PST8PDT

Bugs fixed:

9413827, 9706490

——————————————————————————–

OPatch succeeded.

Rootupgrade output

====

The fixes for bug 9413827 are not present in the 11.2.0.1 crs home

Apply the patches for these bugs in the 11.2.0.1 crs home and then run rootupgrade.sh

/u01/app/11.2.0/11.2.0.2/grid/perl/bin/perl -I/u01/app/11.2.0/11.2.0.2/grid/perl/lib -I/u01/app/11.2.0/11.2.0.2/grid/crs/install /u01/app/11.2.0/11.2.0.2/grid/crs/install/rootcrs.pl execution failed

thanks,

Dev

Hi Dev,

I tried this article using GI Patch bundle 2. I found this hit “http://juliandyke.wordpress.com/2010/10/10/upgrading-oracle-11-2-0-1-rac-clusters-to-11-2-0-2-on-linux-x86-64/”

Although the script is requesting fixes for 9413827, searches for this bug on MOS reveal that it is actually included in bug 9655006 which turns out to be the 11.2.0.1.2 PSU (not 11.2.0.1.1 – thanks Jason) which must be downloaded from MOS. The download file is

p9655006_112010_Linux-x86-64.zip

I was installing oracle 11gR2 RAC. I followed all the steps listed in the http://www.oraclebase.com for installing oracle 11gR2 RAC. I installed the grid infrastructure, and everything is going fine like all SSH is setup and CRS is running. When I am installing the oracle database 11gR2. I had to choose the option of creating RAC database not single instance database, but there it should say rac1 and rac2 nodes. It is only showing rac1. I have rac1 and rac2 nodes. Please, let me know what should I do to correct this problem so rac2 can be seen on the window in the dbca.

Faraz,

Check if the inventory is properly updated and shows both nodes. Also check if the olsnodes command returns both nodes.

-Amit

Hello. olsnodes -n only return rac1 and 1 not rac2. I do not know why is not showing up there. inventory is updated. I wanted to ask help from you like please if you can use teamviewer to see the problem.

Faraz,

I won’t be able to come online and provide that kind of support. I guess you will have to contact Oracle Support and do an OWC for that.

Are you sure you ran root.sh from node 2 also? If not then please run it. Can also see if CRS home is copied to node 2. If yes then most likely root.sh is not run from node 2

-Amit

Faraz,

You can also try running “./runInstaller -updateNodeList”

(check the full syntax at metalink) to add node to inventory and let inventory know about the new cluster_nodes.

-Saurabh Sood

Hello. I have tried reinstalling three times already. I used the addnode.sh and updatenodelist commands but it is not working. I am not able to get the rac2 node listed in the Oracle 11g database software installation for the RAC database option. The Oracle 11g grid infrastructure installation was successful.

Faraz,

Can you show us the command that you are using to add node list and the error that you received!!!!

Thanks

Saurabh Sood

Hello. Saurabh. I cannot post it here. Do you have skype?

Faraz,

I think you can raise a SR with Oracle Support in that case..

Thanks

Saurabh Sood

I am not working for the company now. It is at home.

I am having the problem with VirtualBox. I was following http://www.oraclebase.com for Oracle 11gR2 RAC on Linux 5 using VirtualBox. I need to manually clone the first virtual machine. I was creating it using the clone option before. The ASM disks somehow were not shared, and instead of one cluster across the two nodes it was creating a separate cluster on each node. The VBoxManage command does not seem to work the way it is described in the manual.

In case of [INS-40406], look here:

http://www.usn-it.de/index.php/2012/04/02/oracle-clusterware-ins-40406-the-installer-detects-no-existing-oracle-grid-infrastructure-software-on-the-system/